A Smarter Rubber Duck: The Duck Talks Back

The classic "rubber duck debugging" technique asks developers to explain code problems to an unresponsive toy duck, but AI transforms this into an interactive dialogue where the duck can actually respond with meaningful insights and suggestions.

At heart, programming is a solitary profession. Developers work in teams, drink coffee with their colleagues, and do everything any other office professional does, but the core work itself remains deeply individual, much like a writer putting pen to paper. You rarely see two people writing the same story at the same time (let's leave Extreme Programming aside for the moment), and the same goes for programming. At the end of the day, you're on your own, sometimes with tough problems.

Developers spend hours in front of their computers, translating ideas into code through their IDEs (Integrated Development Environments) and typing millions of characters. There are different approaches to writing and debugging code, but the process remains a complex, almost entirely human endeavour. Discussing challenges with others would be valuable, yet the solitary nature of programming can make this difficult. Developers often find themselves in "the zone", a state of deep focus and productivity, and breaking that flow to consult someone else can disrupt both their momentum and the other person's. Or, perhaps more commonly, there is simply no one else to talk to about the particular problem. Ultimately, no one else cares.

But what if you had someone right at your desk to talk through your code with? Someone you could easily bounce ideas off, who could help you improve your code, debug problems, or work through architectural decisions? Someone who would always be there, ready to listen?

This is where rubber duck debugging comes in.

Rubber Ducking

The process below comes from Rubber Duck Debugging:

- "Beg, borrow, steal, buy, fabricate or otherwise obtain a rubber duck (bathtub variety).

- Place rubber duck on desk and inform it you are just going to go over some code with it, if that’s all right.

- Explain to the duck what your code is supposed to do, and then go into detail and explain your code line by line.

- At some point you will tell the duck what you are doing next and then realise that that is not in fact what you are actually doing. The duck will sit there serenely, happy in the knowledge that it has helped you on your way."

Rubber duck debugging is really just a method for clarifying your own thinking. While you're explaining the problem to the duck (yes, a duck), you're actually organizing your thoughts and working through the solution yourself. The act of explaining helps you understand the problem better, even though the duck never responds. It's the verbalization itself that leads to insights.

New Computer Use: Not Just Typing and Clicking

In a previous article, "What if a computer was a walk in the woods?", I explored how AI is fundamentally changing the way people interact with their computers, particularly through voice interfaces. Typing and clicking have been our primary means of computer interaction for decades, but we're witnessing a significant shift as more users adopt voice commands to work with AI systems. This evolving human-computer interaction represents a notable departure from traditional computing paradigms.

So we are starting to talk to our computers in natural language. What's more, they are starting to talk back. Does this mean the duck could have its own voice?

AI: The Rubber Duck Talks Back

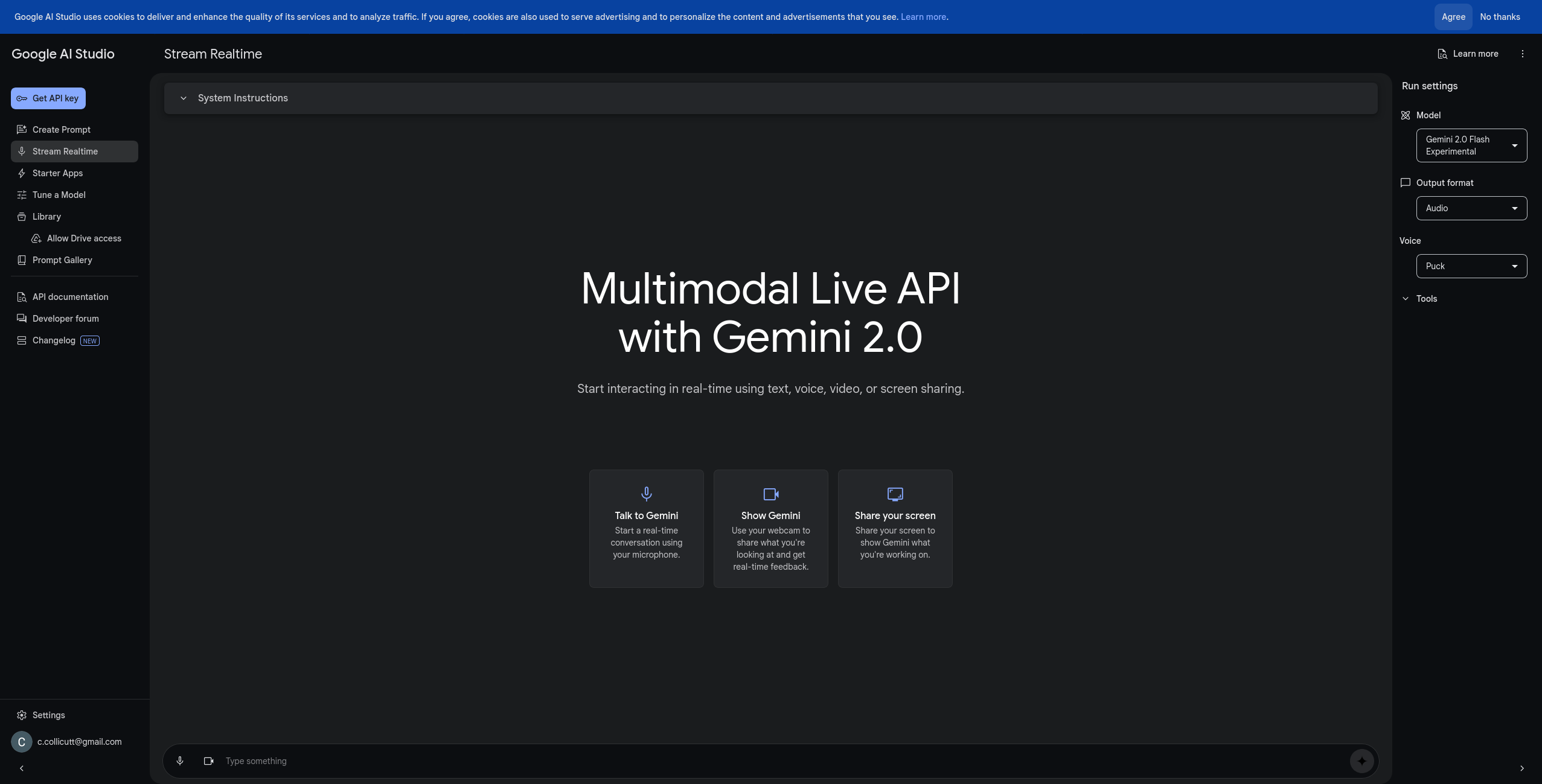

In the Twitter post below, Eric Ciarla lets Google's Gemini 2.0 watch his screen while he is programming, so that he can ask it questions. I highly recommend watching the video to get an idea of what is possible today.

In the video, he starts by asking Gemini, which can see his screen, how to optimise his code, and from there they have a short but interactive discussion about how to do just that.

Screen sharing with Gemini 2.0 while coding feels wild 🤯

It can talk you through anything from architecture decisions to debugging code.

This plus @cursor_ai is the future. pic.twitter.com/U7t4FVm4Bn

Eric Ciarla (@ericciarla), December 12, 2024

Voice interaction with AI isn't limited to Gemini. Tools like MacWhisper allow you to "talk" to your computer and interact with various AI language models, or with almost any app. However, Gemini 2.0 takes this concept further by combining voice interaction with visual understanding. It can not only hold a conversation but also observe and comment on what you are doing on your desktop, including the code you're writing, as Mr. Ciarla's video demonstrates.

In the end, what this means is that the rubber duck can now talk back!

Conclusion

Text-based chat interfaces to Large Language Models (LLMs) have proven their value and have shaken up the IT industry, but they are just the beginning. Keyboard and mouse have been our primary way of using computers, yet we're entering an era where natural, real-time conversations with AI systems are becoming possible. These AI assistants can observe our work context and provide relevant help, allowing fluid, context-aware conversations that were previously the stuff of science fiction. Of course, there are obstacles to overcome and considerations to weigh before adopting this kind of technology, but the point is that it is now possible.

Further Reading

Shameless tip o' the hat to https://dev.to/acekyd/are-the-rubber-ducks-getting-smarter-25p for their great title, which I have sort of borrowed here.

https://news.stanford.edu/stories/2014/04/walking-vs-sitting-042414

https://rubberduckdebugging.com/