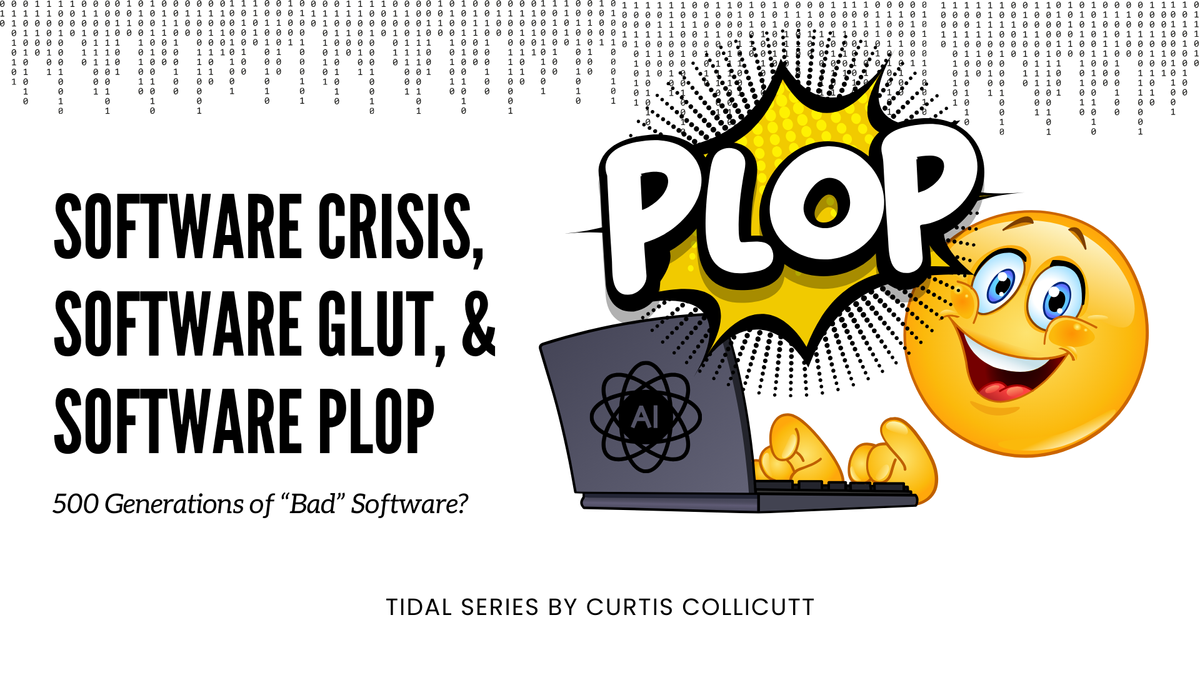

Software Crisis, Software Glut, and Software Plop

Discussion of the increasing complexity facing humanity is unavoidable. We've been in a "software crisis" since at least 1968. Will AI programming, which we might call "plop," make things better...or worse? Are we doomed to 500+ generations of unsafe computers?

I listen to a lot of podcasts and read a lot of articles, and it seems to me that many of them contain a sub-surface level acknowledgement that, overall, worldwide, you know, globally, things are getting pretty complicated. (I should put that on a t-shirt.) Of course, the world is extremely complicated and always has been. But is it getting more so?

Even listening to the Odd Lots podcast, which is what I would consider a great financial and tangential-topic podcast, we run into this issue of increasing entropy.

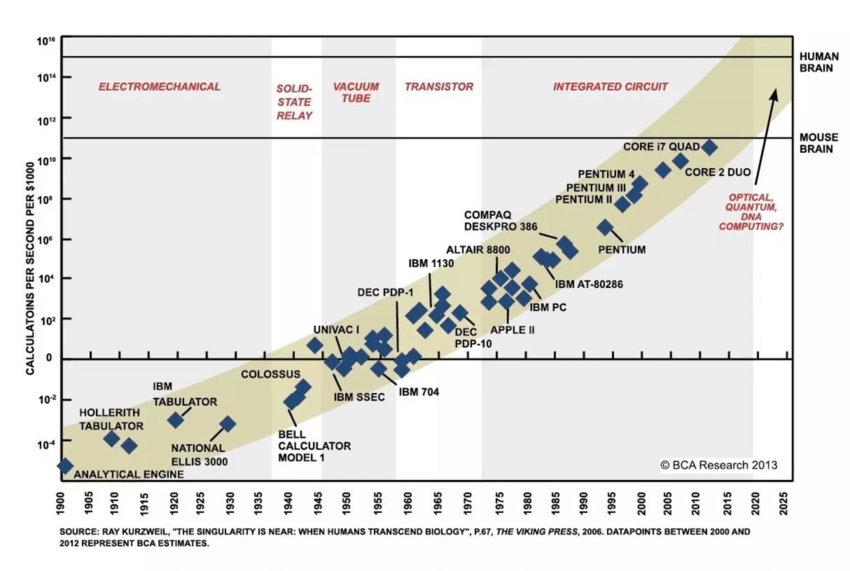

The world gets more complicated as it gets bigger. And it gets exponentially more complicated, and I mean that in the literal mathematical sense because the number of connections grows faster than the number of things–whereas our capability to understand the world, manage it, and make decisions doesn't necessarily grow exponentially. - Dan Davies on the Odd Lots Podcast

I can't even escape complexity in a fun and enjoyable financial podcast!
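
To put rough numbers on the Davies quote, here is a minimal Python sketch. It rests on an assumption about what counts as a "connection": if it means a pair of things, the count grows quadratically, as n(n-1)/2; if it means any possible grouping of two or more things, the count grows exponentially, as 2^n - n - 1. The function names are hypothetical and exist only for illustration.

```python
from math import comb


def pairwise_connections(n: int) -> int:
    """Distinct pairs among n things: n * (n - 1) / 2 (quadratic growth)."""
    return comb(n, 2)


def possible_groupings(n: int) -> int:
    """Subsets of n things with at least two members: 2^n - n - 1 (exponential growth)."""
    return 2**n - n - 1


if __name__ == "__main__":
    # Assumed sample sizes, chosen only to show how quickly the counts diverge.
    for n in (5, 10, 20, 40):
        pairs = pairwise_connections(n)
        groups = possible_groupings(n)
        print(f"{n:>2} things: {pairs:>3} pairs, {groups:,} possible groupings")
```

Either way, the number of relationships outpaces the number of things, which is the point of the quote.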

The Software Crisis

In their recent log/blog/whatever, Wryl-person talks about the "Software Crisis," a term that dates to 1968 and that Edsger Dijkstra took up in his 1972 lecture "The Humble Programmer." Note that this Wryl-person mostly focuses on the problem of abstraction.

Programming models, user interfaces, and foundational hardware can, and must, be shallow and composable. We must, as a profession, give agency to the users of the tools we produce. Relying on towering, monolithic structures sprayed with endless coats of paint cannot last. We cannot move or reconfigure them without tearing them down. - Wryl - https://wryl.tech/log/2024/the-software-crisis.html

The log/blog/post/entry/text/journal makes a fair point. However, I want to stay away from the abstraction problem, i.e. not have to link to Joel Spolsky, because I've done that too much, and focus on the abundance, history, and complexity components as opposed to abstraction.

The major cause of the software crisis is that the machines have become several orders of magnitude more powerful! To put it quite bluntly: as long as there were no machines, programming was no problem at all; when we had a few weak computers, programming became a mild problem, and now we have gigantic computers, programming has become an equally gigantic problem. - Edsger Dijkstra

Now, decades later, we have an absolutely insane amount of computing power that is going to continue to grow, especially thanks to the recent AI movement, and it is distributed all over the world, jammed into almost every nook and cranny.

Dijkstra continues:

Then, in the mid-sixties, something terrible happened: the computers of the so-called third generation made their appearance. - https://www.cs.utexas.edu/~EWD/transcriptions/EWD03xx/EWD340.html

Amazing how we were thinking about this on the 3rd generation of computers.

Software Plop

Your email inbox is full of spam. Your letterbox is full of junk mail. Now, your web browser has its own affliction: slop. - https://www.theguardian.com/technology/article/2024/may/19/spam-junk-slop-the-latest-wave-of-ai-behind-the-zombie-internet

I don't think there is a software crisis. There is a more of a software glut. - kazinator - https://news.ycombinator.com/item?id=40882583 (yes, I'm linking to a comment on Hacker News, but I do so of sound mind and body)

And your programs are full of plop. - Me

In my last newsletter entry I discussed how Claude 3.5 Sonnet is now the baseline for using GenAI for programming. It is really quite good (as it goes, you know, so far for AI programming). I think this capability means that we will have a lot more code over time, and we already have a lot. Some people might even call it a "software glut", and now with GenAI we may have a lot more. Perhaps a staggering amount more.

We could end up in a situation where we have so much code, just as we have so much (bad) text, the "slop," that we end up with programming slop, or what I would call plop.

I've already spent a lot of time over the past few weeks having Claude write code, mostly HTML/CSS, Ruby, and some JavaScript, just to build a basic website via a static generator. I can tell you that I already have a lot more code than I would have if I had spent the time to create it myself. In fact, one thing I've noticed is that sometimes instead of including a dependency, I'll just have Claude write a simpler plugin that accomplishes the same thing as including some random Gem. In many ways it's easier to understand that small amount of code than a full Gem. Did I just create my own plop? Only time will tell, and I have to wonder what my future self will think of the code. Will my future self feel the same way my past self did when they booked a 6:30AM flight?

And So...The Software Crisis Continues Crisis-ing

Basically, we've been in some kind of "software crisis" since the late '60s. I can't imagine what this is doing to the parts of our brain we dedicate to programming and technology. The question is, is this crisis getting worse? And what happens when an industry is in a form of crisis for so long? Will we, 100 years from now, look back on this time in computing and be surprised that we made it through all of this, when some of the most secure and heavily vetted and analyzed software of all time still has vulnerabilities?

I wouldn't mind skipping over the parts of the present where computers are just sandboxes for nation-states and we struggle to keep S3 buckets private.

Could we end up with 500 generations of insecure software? I don't think so. I like the idea of GenAI writing some code, but the question is worth pondering so that we're aware of the possible side effects.

Further Reading and Listening