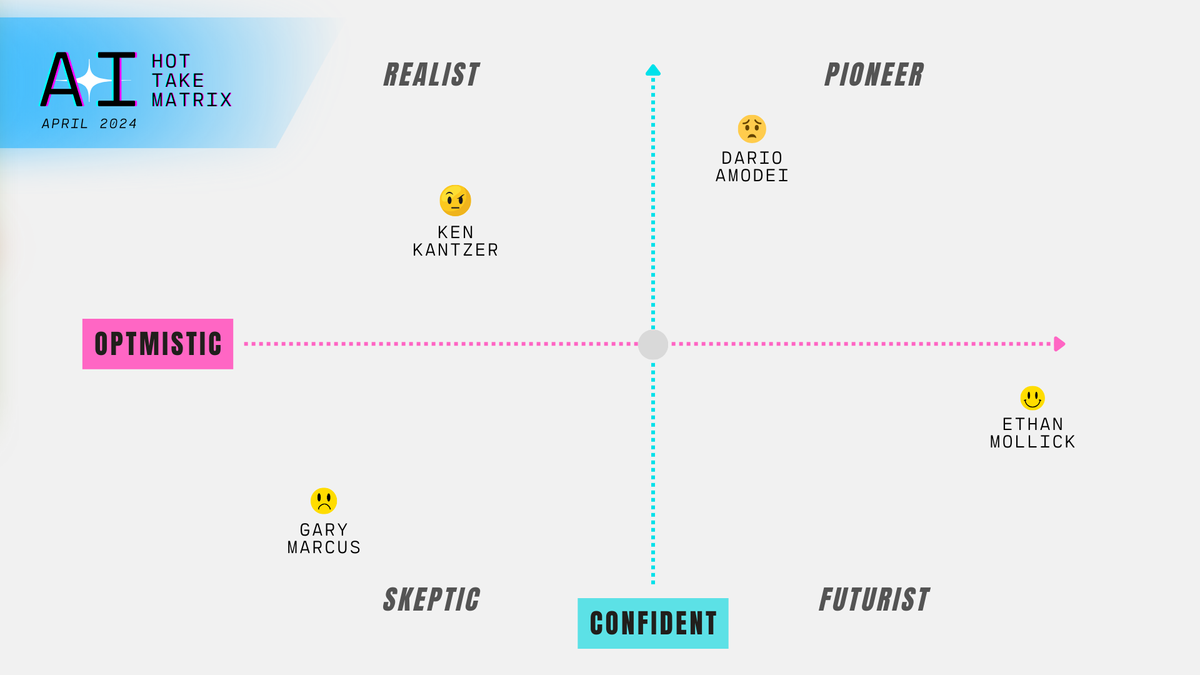

Realists, Pioneers, Skeptics and Futurists: The AI Hot Take Matrix

I had four pieces of AI content decaying in browser tabs, and they were each starkly different in terms of their feel for where AI is headed. I realised that in order to understand what these articles meant for the future of AI, I needed to map these positions out.

There is a never-ending stream of articles, podcasts and takes on the latest advances in artificial intelligence. I have participated in the AI resurgence as best I can, and over the past few weeks I found myself with four different browser tabs lingering, each containing a different piece of AI content. However, each of these "takes on AI" had a completely different spin, a different feel to it, and I began to wonder what I was supposed to do with this starkly differentiated information. Based on these takes, AI could be anything from complete rubbish (actually worse than not having it at all), to the undoing of the world, to forcing governments to introduce universal basic income as the AI does all our work for us.

I realised that in order to understand what these articles meant for the future of AI, I needed to map out the positions and put them on a graph or a matrix, and that's exactly what I did.

First, let's explore the "Hot Take Matrix" itself.

The Hot Take Matrix

Because I had four articles by four quite different people with four completely different takes, I decided a graph would work best, one where I could map them into four quadrants. What I came up with is below, and I'll describe it in a bit more detail.

X-Axis: Level of Optimism About AI

This axis looks at the overall impact of AI on society, from the pessimistic and destructive end (left) to the optimistic views that see beneficial outcomes (increased productivity, solving complex problems such as disease, etc.) on the right. Those on the left side may have a high P(doom) rating.

Y-Axis: Degree of Confidence in AI

Here we assess confidence in the technology as a whole, from the view that the AI's output is useful and 'truthful' (the high end) to the view that it is not useful because of its tendency to make things up rather than answer in the negative (the low end). Can AI actually solve problems? Can we wire up agents to do our work for us? Will it truly be helpful? Could it really replace humans for some work, and would we trust it to do so?

Realists

Realists find current AI practical and are likely to build solutions and companies based on what is possible today. They find what it can do now very valuable, but because AI is a fast-moving target, they are unlikely to adopt every new tool and workflow, knowing that these will soon be obsolete. They take a simple and sensible approach to AI. They may not believe that AI will change society in a major way, but rather that it is good, if not great, at certain things that add value. They will cut through the hype and use AI to do real work.

Pioneers

Pioneers may have companies that are creating the "picks and shovels" for the AI revolution. They will often have extreme beliefs about where AI is going. However, they may have qualms about how powerful AI could become and how quickly it will do so. They may feel that they are in a difficult position because they think AI has the ability to do as much harm as good, yet strangely believe they have little power over the industry itself.

Skeptics

Skeptics have reservations about both the development of AI technology and its ability to have a positive impact on society. They often point to unresolved issues such as ethical concerns, potential unemployment, copyright issues or other negative outcomes. Overall, they may feel that generative AI simply predicts the next word; that it does what it does through little more than advanced statistics. They may believe in AGI, but think it is decades away. They may feel that we are heading for a new AI winter and that the AI-related financial market is headed for a collapse.

Futurists

Futurists often have incredibly hopeful visions of the impact of AI, seeing its potential to solve major societal challenges, and they see massive changes on the horizon. They tend to use the technology rather than build it directly, applying it as much as possible to enable their own work. They also want to teach others how to use it. They are the adopters of GenAI. However, they may still take practical approaches to AI and be unwilling to use it in certain situations, such as writing.
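As a rough sketch of how the two axes carve out the four personas, the matrix can be treated as a lookup on the sign of each score. The `Take` class, the -1.0 to 1.0 score range and the example scores are all hypothetical. The quadrant layout is one reading of the descriptions above (Skeptics low on both axes, Futurists high on both, Pioneers confident but less optimistic, Realists optimistic but more cautious about the technology), not a precise definition.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Take:
    """A hypothetical scored 'hot take' on AI."""
    label: str
    optimism: float    # x-axis: -1.0 (pessimistic) to 1.0 (optimistic)
    confidence: float  # y-axis: -1.0 (low confidence) to 1.0 (high confidence)


# Assumed quadrant layout, keyed by (is_optimistic, is_confident).
QUADRANTS = {
    (False, False): "Skeptic",
    (True, True): "Futurist",
    (False, True): "Pioneer",
    (True, False): "Realist",
}


def quadrant(take: Take) -> str:
    """Return the persona whose quadrant contains this take."""
    return QUADRANTS[(take.optimism >= 0, take.confidence >= 0)]


# Made-up scores, purely for illustration.
examples = [
    Take("useful today, no AGI", optimism=0.4, confidence=-0.2),
    Take("world-altering within months", optimism=-0.3, confidence=0.9),
]
for take in examples:
    print(f"{take.label}: {quadrant(take)}")
```

Real takes rarely sit neatly in one quadrant, so a score near zero on either axis is best read as a borderline case.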

Meet Today's Crew

Here are a few examples of these personas, based on some AI-related content that's come across my desk over the last couple of weeks.

The Realist - Ken Kantzer

Are we going to achieve AGI? No. Not with this transformers + the data of the internet + $XB infrastructure approach.

Ken Kantzer had a great post about his experiences after half a billion GPT tokens. He is building a company, Truss, that uses GenAI to do document analysis. In the course of his work, he's come up with a number of practical lessons. Overall, he feels that when using OpenAI's GPT-4, less is more: less prompting, fewer tools. He also argues that "context windows are a misnomer".

Is he optimistic? Yes, but from a practical point of view.

Is GPT-4 actually useful, or is it all marketing? It is 100% useful. This is the early days of the internet still. Will it fire everyone? No. Primarily, I see this lowering the barrier of entry to ML/AI that was previously only available to Google. - https://kenkantzer.com/lessons-after-a-half-billion-gpt-tokens/

Clearly he is a realist, someone who is using these tools to build a business and does not have the resources to build a dedicated in-house AI team, but one of his points is that with OpenAI he doesn't need to.

The Pioneer - Dario Amodei

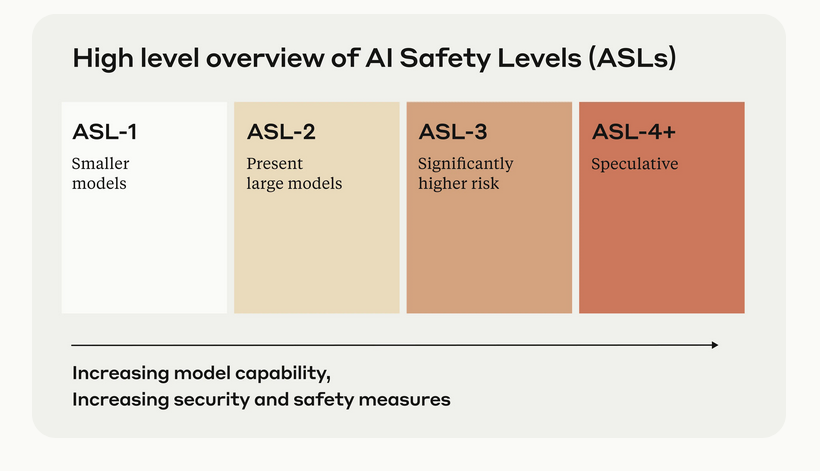

I highly recommend listening to the entire Ezra Klein Podcast with Amodei. Also, in order to understand what he thinks about AI, it would be a good idea to review Anthropic's Responsible Scaling Policy.

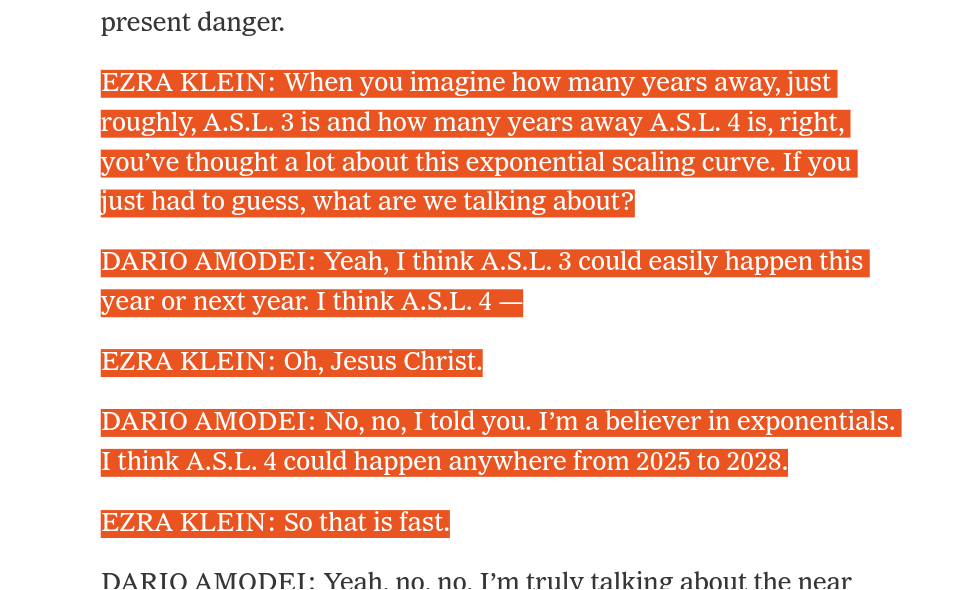

Below we can see that Amodei believes that things are going to happen very, very quickly in the world of AI, where we've got months–not years, not decades, but months–before we see massive, world-altering change. Note where Klein starts swearing.

Amodei is a proponent of "scaling laws", which in high-level terms means that the more information and computing power we feed into these models, the more powerful they become.

I and my colleagues were among the first to run what are called scaling laws, which is basically studying what happens as you vary the size of the model, its capacity to absorb information, and the amount of data that you feed into it. And we found these very smooth patterns. And we had this projection that, look, if you spend $100 million or $1 billion or $10 billion on these models, instead of the $10,000 we were spending then, projections that all of these wondrous things would happen, and we imagined that they would have enormous economic value. - https://www.nytimes.com/2024/04/12/podcasts/transcript-ezra-klein-interviews-dario-amodei.html

Amodei also suggests that ASL-3 (AI Safety Level 3) could arrive very soon, a level at which the risk from the use of AI increases considerably, even to the point of models showing autonomous capabilities.

ASL-3 refers to systems that substantially increase the risk of catastrophic misuse compared to non-AI baselines (e.g. search engines or textbooks) OR that show low-level autonomous capabilities. - https://www.anthropic.com/news/anthropics-responsible-scaling-policy

The Futurist - Ethan Mollick

The current best estimates of the rate of improvement in Large Language Models show capabilities doubling every 5 to 14 months.

- Article/Podcast - https://www.nytimes.com/2024/04/02/opinion/ezra-klein-podcast-ethan-mollick.html and https://www.oneusefulthing.org/p/what-just-happened-what-is-happening

- https://twitter.com/emollick

- Substack - https://www.oneusefulthing.org/

- Book - https://www.moreusefulthings.com/book

Mollick is very positive about the current state of AI, and thinks it is only going to get better.

Here he talks about how we don't know what LLMs can or can't do, and how they can solve surprisingly difficult problems if we keep at it.

In fact, it is often very hard to know what these models can’t do, because most people stop experimenting when an approach doesn’t work. Yet careful prompting can often make an AI do something that seemed impossible. For example, this weekend, programmer Victor Taelin made a bet. He argued that "GPTs will NEVER solve the A::B problem" because they can’t learn how to reason over new information. He offered $10,000 to anyone who could get an AI to solve the problem in the image below (he called it a “braindead question that most children should be able to read, learn and solve in a minute,” though I am not sure it is that simple, but you should try it.) - https://www.oneusefulthing.org/p/what-just-happened-what-is-happening

He also works with every model and knows which ones are working best for him right now.

The biggest capability set right now is GPT-4, so if you do any math or coding work, it does coding for you. It has some really interesting interfaces. That’s what I would use — and because GPT-5 is coming out, that’s fairly powerful. And Google is probably the most accessible, and plugged into the Google ecosystem. So I don’t think you can really go wrong with any of these. Generally, I think Claude 3 is the most likely to freak you out right now. And GPT-4 is probably the most likely to be super useful right now. - https://www.oneusefulthing.org/p/what-just-happened-what-is-happening

It's interesting that he suggests Claude 3 is capable of freaking people out, while Kantzer doesn't think it adds much value over GPT-4. Who's right?

The Skeptic - Gary Marcus

If we really have changed regimes, from rapid progress to diminishing returns, and hallucinations and stupid errors do linger, LLMs may never be ready for prime time. Instead, as I warned in August, we may well be in for a correction. In the most extreme case, OpenAI’s $86 billion valuation could look in hindsight like a WeWork moment for AI.

I subscribe to Gary Marcus' Substack. It's a bit depressing for me to read sometimes, but I keep at it to try to maintain a varied and skeptical view on AI.

Even just looking at the titles of his posts we can get a good feel for where he stands.

- "Evidence that LLMs are reaching a point of diminishing returns — and what that might mean" - This one is particularly interesting because he is refuting some information that Ethan Mollick provides.

- "Superhuman AGI is not nigh"

- "$10 million says we won’t see human-superior AGI by the end of 2025"

- "Breaking news: Scaling will never get us to AGI"

In the post that I'm specifically referencing here, the one where he refutes some statements by Mollick, he says the following.

The study Mollick linked to doesn’t actually show what he claims. If you read it carefully, it says literally nothing about capabilities improving. It shows that models are getting more efficient in terms of the computational resources they require to get to given level of performance, “the level of compute needed to achieve a given level of performance has halved roughly every 8 months, with a 95% confidence interval of 5 to 14 months.” But (a) past performance doesn’t always predict future performance, and (b) most of the data in the study are old, none from this year. - https://garymarcus.substack.com/p/evidence-that-llms-are-reaching-a
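To make the distinction in that quote concrete, here is a minimal sketch of what "compute halving every 8 months" implies, assuming a clean exponential trend (exactly the kind of extrapolation Marcus cautions against). The function name and the 24-month horizon are hypothetical choices for illustration; the 5, 8 and 14 month figures come from the quoted study.

```python
def compute_fraction(months_elapsed: float, halving_months: float) -> float:
    """Fraction of the original compute needed to reach the same
    performance after `months_elapsed`, assuming the requirement
    halves every `halving_months` (a simple exponential model)."""
    return 0.5 ** (months_elapsed / halving_months)


# Lower bound, central estimate and upper bound of the quoted interval.
for halving in (5, 8, 14):
    fraction = compute_fraction(24, halving)
    print(f"halving every {halving:>2} months: "
          f"{fraction:.1%} of the original compute after 24 months")
```

With the central 8-month estimate, 24 months is three halvings, so the same performance would need one-eighth of the original compute. That is a statement about efficiency at a fixed level of performance, not about capabilities doubling.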

Again...who is right? Who can see the future?

Hot Take Matrix

I'll keep updating this matrix as I come across new and interesting "hot takes." We're all just making bets on the future of AI here!