Reframing Risk as Trust

Is it really risks we're looking for? Finding a million little pieces of risk isn't that useful; what we are actually doing is building trust in our systems. Maybe we should admit that.

Lately I've been playing around with and testing software like Garak, a tool for finding vulnerabilities, of sorts, in large language models (LLMs).

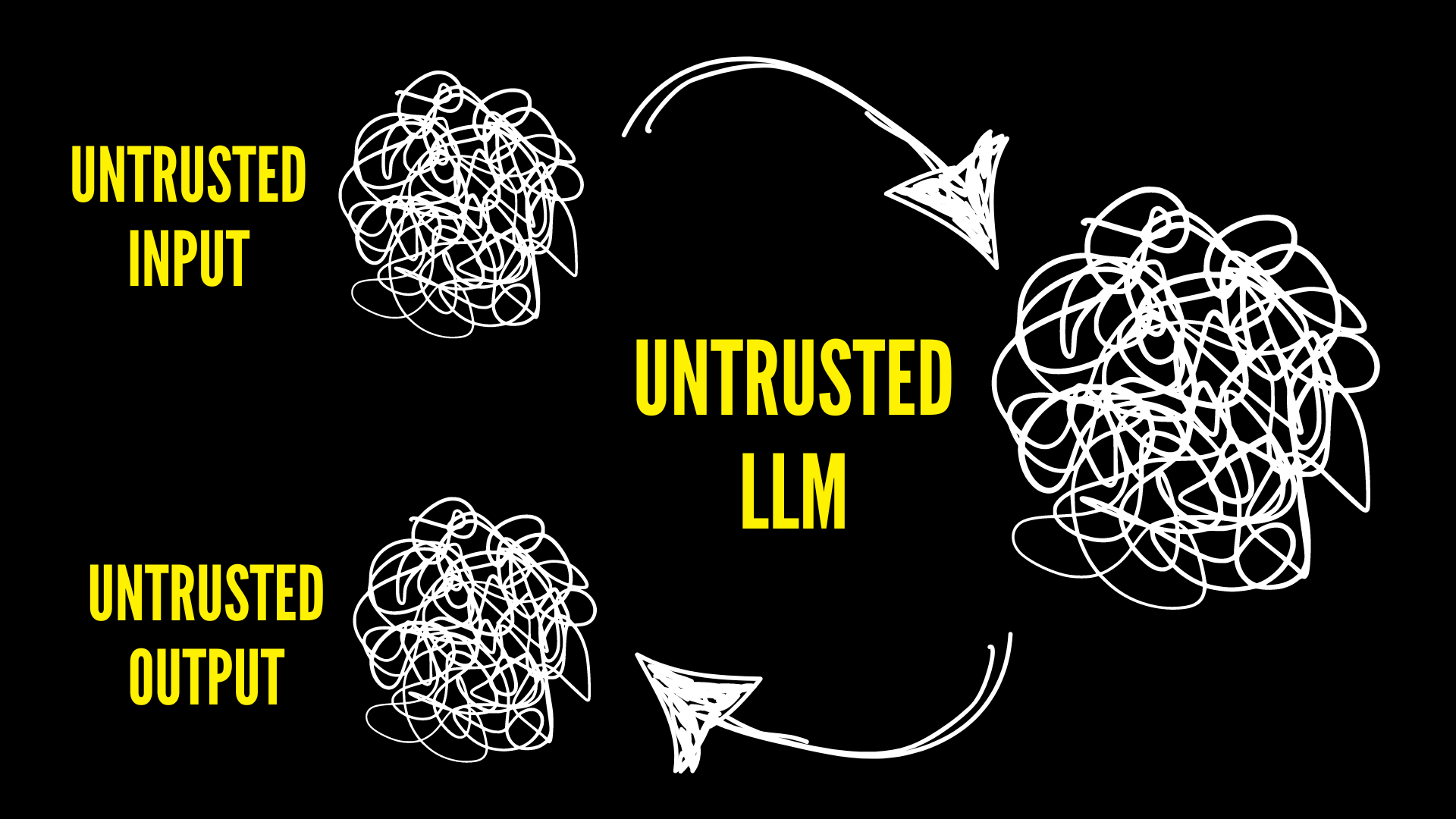

LLM security is a little weird, in part because LLMs are so new, but also because untrusted input goes into the LLM, which is itself a giant untrusted blob of interconnected nodes, and the LLM returns untrusted output. The whole process seems kind of bonkers. But that is what almost every application is: untrusted input, untrusted program, untrusted output. So with a tool like Garak, or any vulnerability scanner or "risk finder," you get a report of all the risks in whatever was scanned. But what are we really doing? I think we're building trust, not counting risks.

Trust vs. Risk

Security teams have focused too much on reducing risk. Given the quickly changing technology landscape, they might not have had a choice. However, given recent breaches, it’s important to focus on establishing trust both internally and externally. - https://franklyspeaking.substack.com/p/security-needs-to-shift-away-from-0d2

In the newsletter quoted above, author Frank Wang (whose articles are well worth reading) makes a good point: we've focused too much on reducing risk and not enough on building trust. In this article, however, I'm making a slightly different point. We seem to be collecting a million little risks and viewing the world from that perspective, when we should be focusing on building trust. Building trust is also likely what we are already doing, unconsciously.

In cybersecurity, we spend a lot of time talking about, investigating, and ultimately collecting risks. We build up thousands, if not millions, of tiny little risks, like Common Vulnerabilities and Exposures (CVEs) in vulnerability management. We take those risks and put them in graphs and spreadsheets, collecting and even trading them as fanatically as baseball cards. But we can't really deal with all of those individual risks. It's just not possible. And yet we keep doing it.

The reality, I believe, is that under the covers we are building trust, not collecting risk. We collect all these little risks because we want to limit them and reduce their count to some kind of "magic number." At that point we feel either that 1) we can trust the system or application to some extent, or 2) we can defend the risk level if we have to, say, to our organization's leadership.

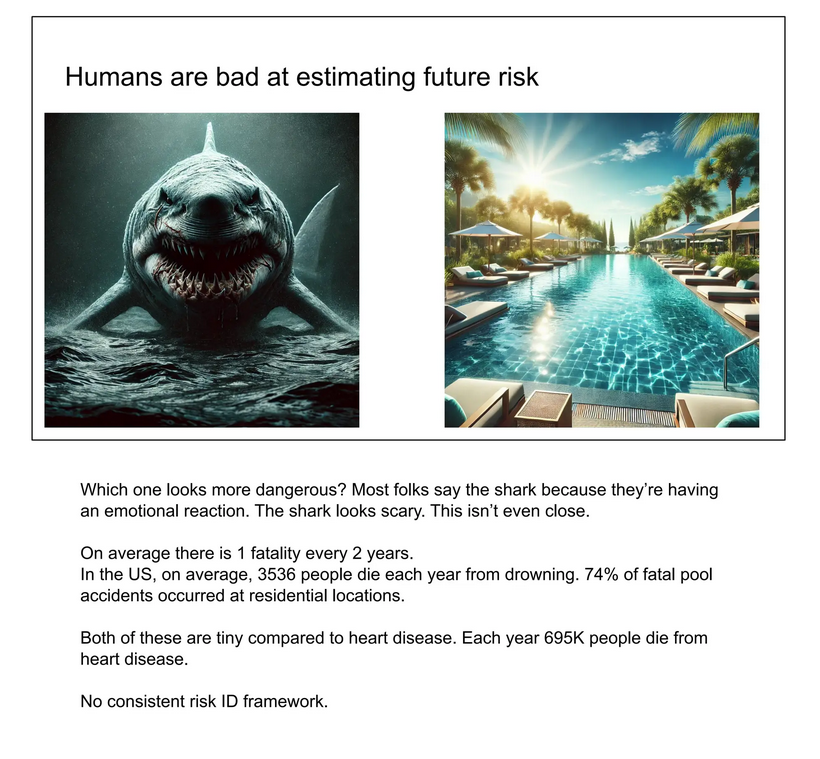

Humans are Bad at Assessing Risk

The lesson, in the broadest sense, is that humans are bad at assessing risk. - https://duo.com/decipher/humans-are-bad-at-risk-assessment-and-other-stories

Most people are more afraid of risks that can kill them in particularly awful ways, like being eaten by a shark, than they are of the risk of dying in less awful ways, like heart disease—the leading killer in America. - https://www.schneier.com/essays/archives/2008/01/the_psychology_of_se.html

Most of the time, when the perception of security doesn’t match the reality of security, it’s because the perception of the risk doesn’t match the reality of the risk. We worry about the wrong things: paying too much attention to minor risks and not enough attention to major ones. We don’t correctly assess the magnitude of different risks. - https://www.schneier.com/essays/archives/2008/01/the_psychology_of_se.html

For many reasons, humans are bad at assessing risk. We worry about the wrong things, and this is reflected in the way we manage cybersecurity. To fully understand how we manage risk, we would need to understand human psychology, which is a topic for another day. It's best to read the linked articles above, especially Schneier's piece. Clearly, though, psychology plays a key role in how we think about risk: we can't escape the human side.

LLMs and Trust

I think understanding the risks of LLMs is a good example of dealing with modern techno-risks, because LLMs are currently a "black box" blob of hidden switches: we have no window into them. So when we run tools like Garak, what we are actually doing is building trust. Instead of a report of CVEs, we get a similar report of the risky behavior the LLM is capable of, and from that report we can start to build trust in the LLM as a whole. We cannot simply update a dependency inside the LLM to remove a CVE; that paradigm doesn't apply to LLMs.

Of course, we want to avoid anthropomorphizing LLMs, which could become a problem if we try to apply some kind of "trust" metric to them. My point, though, is that ultimately we are not counting risks but building trust. Getting these risks to zero is not possible, and in trying, we may lose sight of our true goal.

Further Reading