AI Platform Risk Is Real

Commercial AI companies are leapfrogging each other in terms of LLM capabilities. These companies are also risky businesses that could fail. Other startups, such as the company behind the Cursor IDE, are building tools on top of these new platforms that end users could come to rely on, so there are layers of exposure.

There is a certain segment of the programming and application developer population, myself included, that is enamored with tools like Cursor: tools that can actually write code, and honestly write it really well, based on natural language input. It's pretty incredible in some ways: one can simply ask for some complex application code and Cursor will go and write it.

But these tools are new. Large language models are new. The big companies behind them (OpenAI, Cohere, Anthropic, and so on) are also new. The products they make, and the products based on them, are new. The teams building the APIs are new. These companies are constantly leapfrogging each other in performance and capability, and sometimes the gaps between releases, and between competing companies, are considerable, if only for a short while.

Relatedly, I believe in the idea of "phase change" or "emergent" properties in these LLMs. This is not as grandiose as it may sound; it is simply the concept that LLMs can rapidly improve in certain capabilities, to the point where earlier models seem virtually useless for a task at which a newer model is much more capable. This may not be "emergence" or a "phase change" in the strict sense of those terms, but when one model's API experiences increased failure rates and the tools you use fall back on another, less capable model, perhaps from a different company altogether, the feeling is like digging a big hole and having your shovel taken away and replaced with a dessert spoon. Suddenly the job is that much harder.

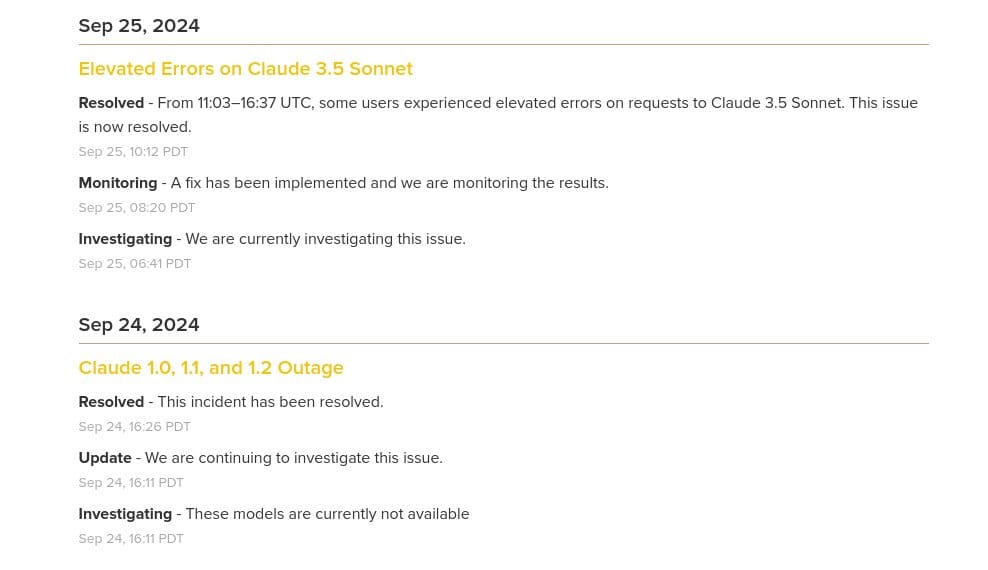

Elevated Error Rates

As I mentioned earlier, there are a lot of people using the Cursor IDE to build applications and generally experiment with AI code generation.

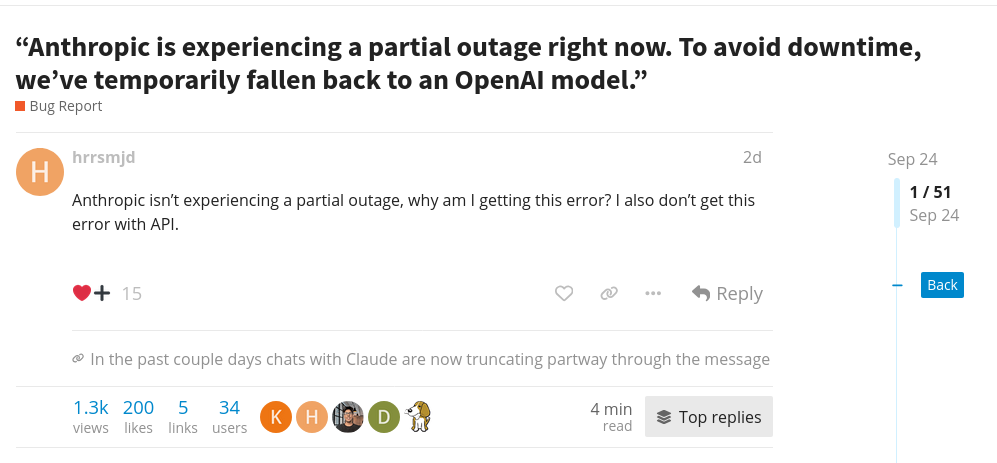

Recently, however, Anthropic, whose API Cursor uses as its backend LLM, started experiencing "elevated error rates," and Cursor fell back on OpenAI's models.
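To make the fallback idea concrete, here is a minimal, hypothetical sketch of how a tool might route requests through an ordered list of model providers, moving to the next one when a call fails. This is not Cursor's actual implementation; `Provider`, `ProviderError`, and `complete_with_fallback` are illustrative names, and the stand-in providers make no real API calls.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple


class ProviderError(Exception):
    """Hypothetical error raised when a provider call fails (e.g. elevated error rates)."""


@dataclass
class Provider:
    name: str                       # hypothetical label, e.g. "primary" or "fallback"
    complete: Callable[[str], str]  # hypothetical function: prompt -> completion


def complete_with_fallback(providers: Sequence[Provider], prompt: str) -> Tuple[str, str]:
    """Try each provider in order; return (provider_name, completion) from the first that succeeds."""
    errors: List[str] = []
    for provider in providers:
        try:
            return provider.name, provider.complete(prompt)
        except ProviderError as exc:
            # Record the failure and move on to the next (possibly less capable) provider.
            errors.append(f"{provider.name}: {exc}")
    raise ProviderError("all providers failed: " + "; ".join(errors))


# Stand-in providers for illustration only.
def flaky_primary(prompt: str) -> str:
    raise ProviderError("elevated error rates")


def fallback(prompt: str) -> str:
    return f"[fallback answer to: {prompt}]"


providers = [Provider("primary", flaky_primary), Provider("fallback", fallback)]
name, answer = complete_with_fallback(providers, "write a parser")
print(name)  # fallback
```

Note that the caller still gets an answer, so the switch is easy to miss; unless the tool surfaces which provider served the request, the first sign of the fallback is simply that the results got worse.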

Here's a forum post on the subject.

During this period of "elevated error rates," most people using Cursor found themselves "back in the stone age" in terms of how well OpenAI's models can code compared with Anthropic's (at least for now; things may have changed since I wrote this, as, again, these companies are constantly leapfrogging each other).

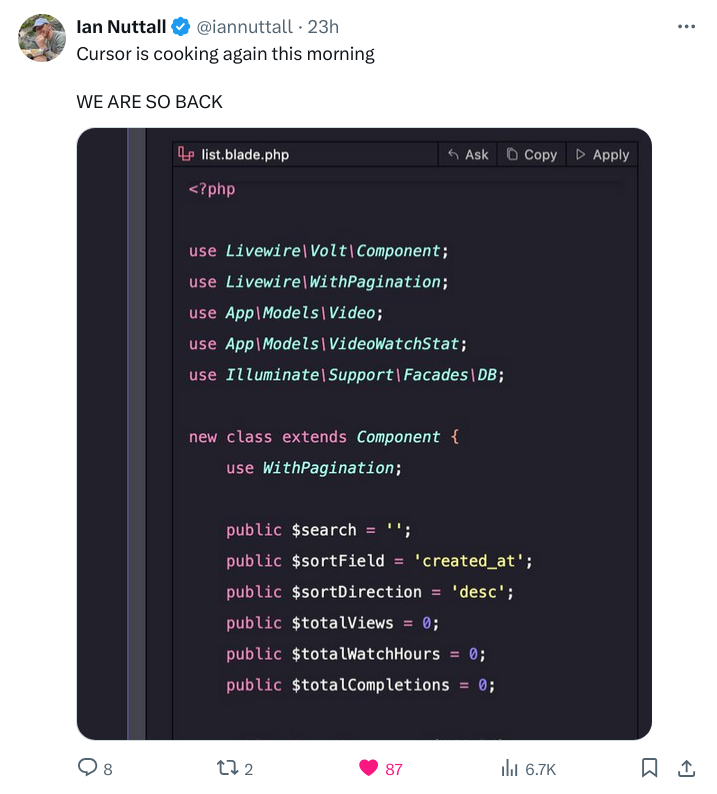

And then when Anthropic came back...

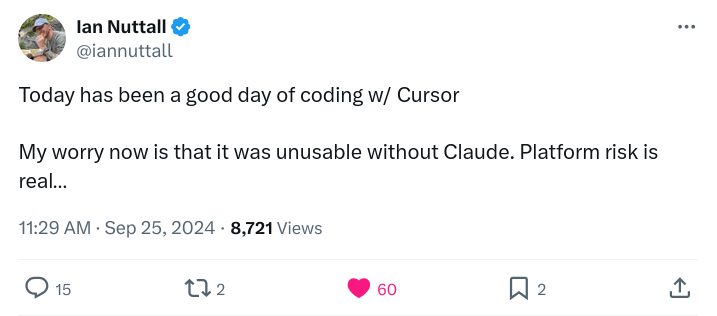

Platform Risk Is Real

One problem with building applications on generative AI platforms like Anthropic's is the question of what happens if Anthropic goes out of business, or has a massive failure, or somehow breaks its model and can't fix it, or changes its model, "nerfing" it, so that it no longer performs the way it used to. Or maybe the government legislates it out of existence. Or OpenAI buys Anthropic and kills Claude (which sounds terrible, given the human names). The (business) risks are many.

If any of these risks materialize, you, or the company you've built on code that AI helped you write, can no longer update, extend, or fix that code the way you used to. Instead, you have to go back to manually writing and maintaining a lot of code that an AI tool created, code that you and your team may not even understand. Or maybe you need to deliver a feature to a customer but can no longer do so in a timely manner. If this change is permanent, you may need to hire many more people, or reduce the number of features and other capabilities you are adding, which could make the underlying business plan unfeasible. This is a significant business risk.

Or, setting business risk aside, perhaps it's just not as much fun to code anymore, now that one has gotten so used to Cursor + Claude. (Although, of course, some people love and adore the act of programming, all the way down to assembly language, and I thank them for it!)

The reality is that right now, today, September 26, 2024, Anthropic's LLM is so much better at programming with Cursor that Cursor is basically (subjectively) useless without it. Will other LLM vendors catch up? Or could we be headed for a future where there is a monopoly, or perhaps a duopoly, around AI and programming? And even if there is more than one LLM that is good at programming, the differences in their styles could be substantial enough to drastically change how a tool like Cursor works.

So far I have been talking about the risks to developers and companies using Cursor, which relies on other companies' models behind the scenes, but there is also risk to Cursor the company itself: for example, what if Anthropic, for whatever reason, cut off its access? Every business has risks, and some risks are bigger than others.

Will LLMs become so commoditized that switching between them makes no discernible difference? Or, as in the current example of Cursor + Claude vs. Cursor + OpenAI, will we see significant degradation when switching, creating considerable platform risk?

More questions than answers, I'm afraid.

PS. Success is a Risk Too